LN1

Alphabets and Strings

An alphabet is a set of symbols

String: a sequence of symbols from some alphabet

Language: a set of strings

Unary numbers alphabet

Unary number: 1 11 111...

String Operations

- Concatenation

- Reverse

- String Length

Empty String: A string with no letters is denoted: or - Substring: a subsequence of consecutive characters

- Prefix and Suffix is prefix and is suffix

- Exponent Operation: and

- The * Operation

:the set of all possible strings from alphabet - The + Operation

Languages

A language over alphabet is any subset of

Empty language or

Operations on Languages

- The usual set operations: union, intersection, difference, complement

- Reverse:

- Concatenation:

- Star-Closure (Kleene *)

All strings that can be constructed from

- Positive Closure

LN2 Deterministic Finite Automata

For every state, there is a transition for every symbol in the alphabet

Formal Definition

Deterministic Finite Automaton (DFA)

- : set of states

- : input alphabet (:the input alphabet never contains empty string)

- : transition function

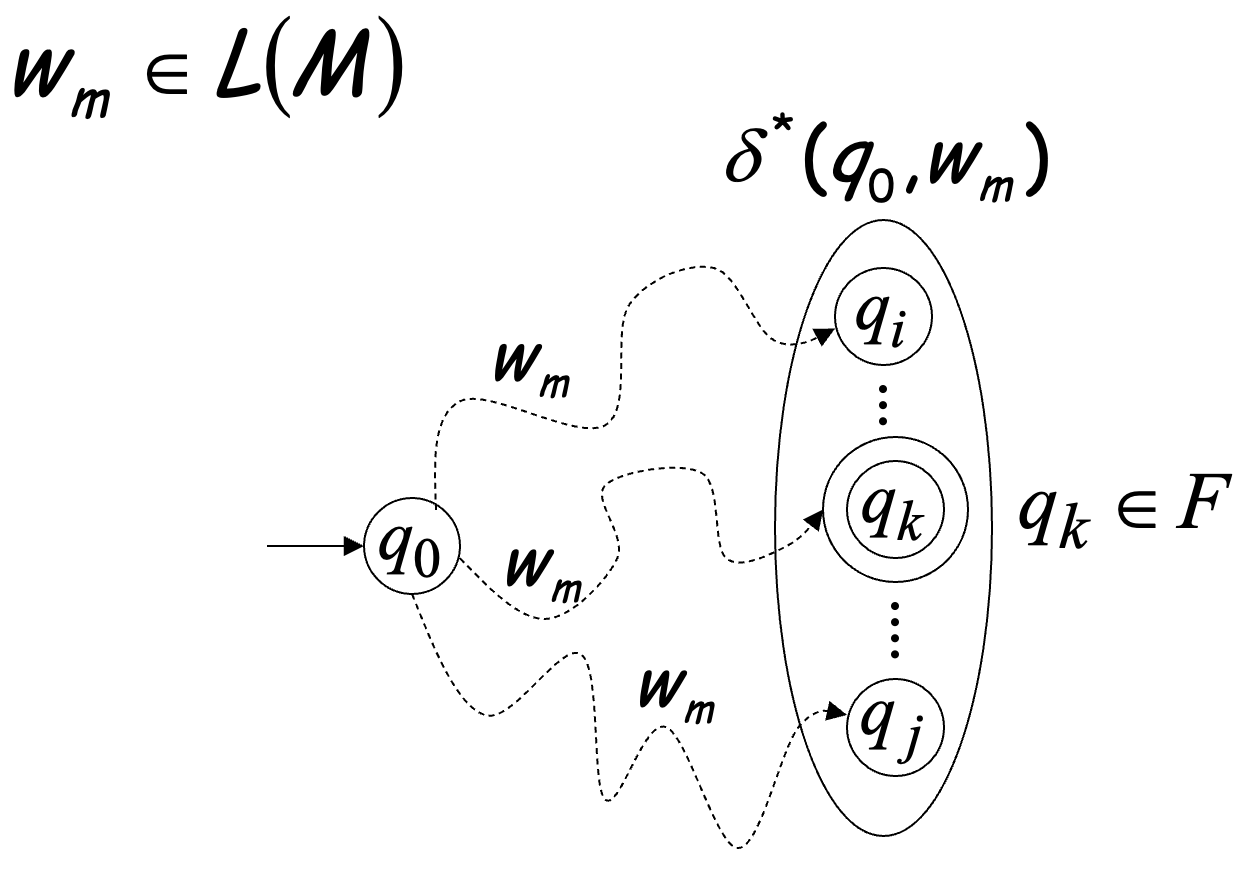

Extended Transition Function

Describes the resulting state after scanning string from state - : initial state

- : set of accepting states

Language Accepted by DFA

Language accepted by

Language rejected by

Regular Languages

A language is regular if there is a DFA that accepts it, which means

The languages accepted by all DFAs form the family of regular languages

LN3 Non-Deterministic Finite Automata

A NFA accepts a string:

if there is a computation path of the NFA that accepts the string.

all the symbols of input string are processed and the last is an accepting state2

A NFA rejects a string:

if there is no computation of the NFA that accepts the string.

For each possible computation path:

All the input is consumed and the automaton is in a non accepting state

or

The input cannot be consumed

- : transition function

(can be )

Note that for any state

Equivalence of Machines

Machine is equivalent to machine if

Languages accepted by NFAs = Regular Languages (aka Languages accepted by DFAs)

NFAs and DFAs have the same computation power, namely, they accept the same set of languages.

Proof:

- Languages accepted by NFAs Regular Languages. Because every DFA is trivially a NFA;

- Languages accepted by NFAs Regular Languages. Becasue any NFA can be converted to an equivalent DFA(We will show it later).

Conversion Procedure Steps

- Initial state of NFA is and , so that initial state of DFA is ;

- For every DFA’s state , compute in the NFA , then add transition to DFA ;

- Repeat Step 2 for every state in DFA and symbols in alphabet until no more states can be added in the DFA;

- For any DFA state , if some is accepting state in NFA, then is accepting state in DFA.

LN4 Properties of Regular Languages

We say Regular languages are closed under

- Union

- Concatenation

- Star

- Reversal

- Complement

- Intersection

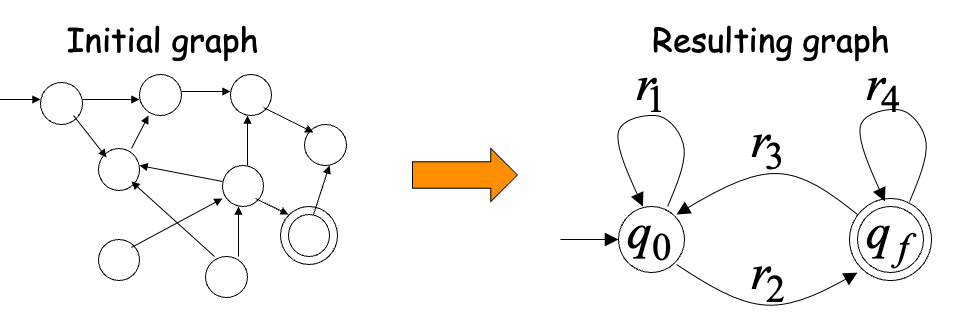

Every NFA(also include NFA without accepting state) is equivalent to a NFA which contains single accept state.

LN5 Regular Expressions

Regular Expressions

Regular expressions describe regular languages

describes the language

Primitive regular expressions:

Given regular expressions , are regular expressions

Languages of Regular Expressions

means langguage of regular expression

Equivalent Regular Expressions

Theorem:Languages Generated by Regular Expressions = Regular Languages

When we say we are given a Regular Language , we mean Language is in a standard representation (DFA, NFA, or Regular Expression)

LN6 Regular Grammars

Gramars

Grammar

- : Set of variables

- : Set of terminal symbols

- : Start variable

- : Set of Production rules

Sentential Form :A sentence that contains variables and terminals

Language of a Grammar

For a grammar with start variable

is one string of terminals

Linear Grammars

Linear Grammars: Grammars with at most one variable at the right side of a production

A Non-Linear Grammar

means number of in string

Right-Linear Grammars:

All productions have form or

Left-Linear Grammars:

All productions have form or

Regular Grammars

A regular grammar is any right-linear or left-linear grammar

Regular grammars generate regular languages

Theorem Languages Generated by Regular Grammars Regular Languages = Regular Languages

For any transition add production

For any final state add production

LN7-8 Non-regular languages (Pumping Lemma)

Non-regular languages

The Pigeonhole Principle and DFAs

Considering number of states of DFA is , for a walk of , if , which means numbers of states in walk is at least , then a state is repeated in the walk

The Pumping Lemma

Take an infinite regular language, There exists a DFA that accepts

Take string with , then, at least one state is repeated in the walk of

Take to be the first state repeated

We can write , Where corresponds to substring between first and second occurrence of

Note that , because of unique states in (Since, in no state is repeated execpt )

Since there is at least one transition in loop,

We do not care about the form of string ( may actually overlap with the paths of and )

In General, the string is accepted

Therefore,

The Pumping Lemma:

- Given a infinite regular language

- there exists an integer (critical length)

- for any string with length

- we can write

- with and

- such that

Applications of the Pumping Lemma

Every language of finite size has to be regular

Therefore, every non-regular language has to be of infinite size

Assume for contradiction that is a regular language

Since is infinite we can apply the Pumping Lemma

Let be the critical length for

We pick

We can write

Thus

But , CONTRADICTION!!!

LN9 Context-Free Languages

Context-Free Grammars

Grammar

Describes matched parentheses:

Derivation Order and Derivation Trees

| derivation | 1 | 2 | 3 | 4 | 5 | 6 |

|---|---|---|---|---|---|---|

| leftmost | ||||||

| rightmost |

They give same derivation tree

Ambiguity

A context-free grammar is ambiguous if there is a string which has:

two different derivation trees or two leftmost derivations (Two different derivation trees give two different leftmost derivations and vice-versa)

In general, ambiguity is bad and we want to remove it

Sometimes it is possible to find a non-ambiguous grammar for a language

But, in general ιt is difficult to achieve this

LN10 Simplifications of Context-Free Grammars

A Substitution Rule

Nullable Variables -production

Unit-Productions

Useless Productions

Normal Forms for Context-free Grammars

Chomsky Normal Form Each production has form: or

Conversion to Chomsky Normal Form

It is easy to find the Chomsky normal form for any context-free grammar (which doesn’t generate )

Greinbach Normal Form All productions have form:

Greinbach normal forms are very good for parsing strings (better than Chomsky Normal Forms)

However, it is difficult to find the Greinbach normal of a grammar

LN11 Properties of Context-Free languages

Closed

- Union

- Concatenation

- Star Operation

Negative Properties of Context-Free Languages (not closed)

- Intersection

- Complement

Intersection of Context-free languages and Regular Languages(Applications of Regular Closure)

The intersection of a context-free language and a regular language is a context-free language

Prove that is not context-free

Becasue is regular, is not context-free.

So it's impossible that is context-free

LN12 Pushdown Automata PDAs

Pushdown Automaton -- PDA

Initial Stack Symbol

The States

- replace:

- push:

- pop:

- no change:

PDAs are non-deterministic

Allowed non-deterministic transitions

A string is accepted if there is a computation such that:

All the input is consumed AND The last state is an accepting state

we do not care about the stack contents at the end of the accepting computation

Formalities for PDAs

Pushdown Automaton (PDA)

- state

- Input alphabet

- Stack alphabet

- Transition function

- Initial state

- Stack start symbol

- Accept states

- Current state

- Remaining input

- Current stack content

Language accepted by PDA :

LN13 PDAs Accept Context-Free Languages

Theorem: Context-Free Languages (Grammars) = Languages Accepted by PDAs

Convert Context-Free Grammars to PDAs

For each terminal in , add

For each production in , add

e.g.

PDA:

Grammar Leftmost Derivation

PDA Computation

Grammar generates string if and only if PDA accepts

Convert PDAs to Context-Free Grammars

Too long to wirte down here

LN14 DPDA Deterministic PDA

Definition

is DPDA

is not DPDA

because is not allowed in DPDA

PDAs Have More Power than DPDAs

We will show that there exists a context-free language which is not accepted by any DPDA

Which means Deterministic Context-Free Languages(DPDA) Context-Free

Languages(PDA)

e.g. is context-free but not deterministic context-free

Because if there is a DPDA accept , we will get a DPDA accepting by modifying , replacing with

The language is not context-free(we can prove this using pumping lemma later)

LN15-16 Pumping Lemma for Context-free Languages

The Pumping Lemma:

For any infinite context-free language

there exists an integer such that

for any string

we can write

with length and

and it must be that:

LN17 Turing Machines

Turing Machines are deterministic

The tap with infinite length

The head at each transition (time step):

- Reads a symbol

- Writes a symbol

- Moves Left or Right

Turing Machines are deterministic(No -transitions allowed)

No transition for input symbol is allowed

Halting

The machine halts in a state if there is no transition to follow

Accepting states have no outgoing transitions

The machine halts and accepts

Accept Input String = If machine halts in an accept state

Reject Input String = If machine halts in a non-accept state or If machine enters an infinite loop

In order to accept an input string, it is not necessary to scan all the symbols of the input string

Formal Definitions for Turing Machines

: Blank

A move

If a language is accepted by a Turing machine then we say that is:

- Turing Recognizable

- Turing Acceptable

- Recursively Enumerable

LN18 Turing’s Thesis

Turing’s thesis (1930):

Any computation carried out by mechanical means can be performed by a Turing Machine

An algorithm for a problem is a Turing Machine which solves the problem

Variations of the Turing Machine

Turing machines with:

- Stay-Option

- Semi-Infinite Tape

- Multitape

- Multidimensional

- Nondeterministic

Same Power of two machine classes:

both classes accept the same set of languages

each new class has the same power with Standard Turing Machine(accept Turing-Recognizable Languages)

Turing Machines with Stay-Option

The head can stay in the same position

L,R,S: possible head moves

- Stay-Option Machines simulate Standard Turing machines

because any standard Turing machine is also a Stay-Option machine - Standard Turing machines simulate Stay-Option machines

because for any transition we can replace it with and for every possible tape symbol

Semi-Infinite Tape

Multiple Track Tape

It is a standard Turing machine, but each tape alphabet symbol describes a pair of actual useful symbols

The head extends infinitely only to the right and Initial position is the leftmost cell. When the head moves left from the border, it returns back to leftmost position

- Standard Turing machines simulate Semi-Infinite machines

We can insert special symbol # at left of input string and add a self-loop to every state (except states with no outgoing transitions) - Semi-Infinite tape machines simulate Standard Turing machines

choose a reference point and generate a Semi-Infinite tape machine with two tracks(right part and left part)

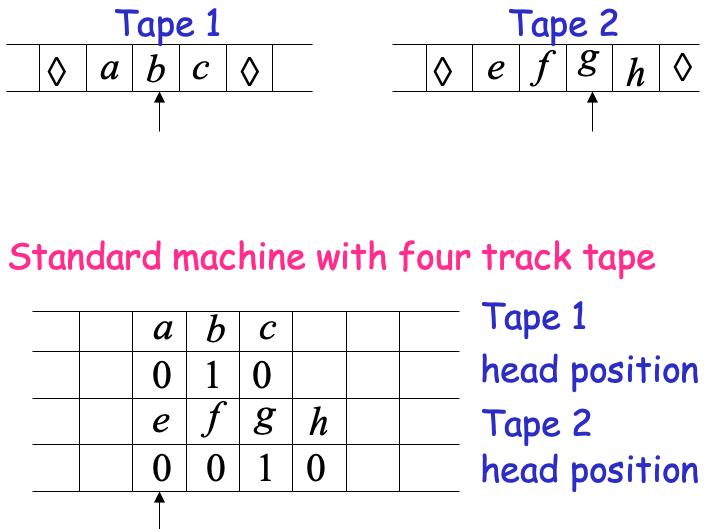

Multi-tape Turing Machines

- Multi-tape machines simulate Standard Turing machines

Just use one tape - Standard Turing machines simulate Multi-tape machines

Uses a multi-track tape to simulate the multiple tapes. A tape of the Multi-tape machine corresponds to a pair of tracks

Note that same power doesn’t imply same speed

, Standard Turing machine needs time but 2-tape machine needs time(copy to tape 2 and compare tape 1 and tape 2)

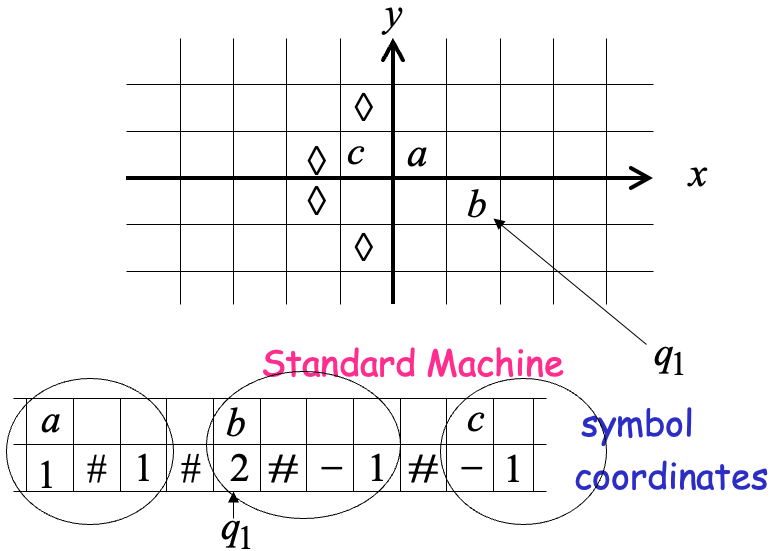

Multidimensional Turing Machines

MOVES: L,R,U,D

- Multidimensional machines simulate Standard Turing machines

Just use one dimension - Standard Turing machines simulate Multidimensional machines

Use a two track tape; Store symbols in track 1; Store coordinates in track 2

Nondeterministic Turing Machines

- Nondeterministic machines simulate Standard Turing machines

every deterministic machine is also nondeterministic - Standard (deterministic) Turing machines simulate Nondeterministic machines

Uses a 2-dimensional tape(equivalent to standard Turing machine with one tape)

LN19 Universal Turing Machine

A Universal Turing Machine

Tape 1 contents of Universal Turing Machine: binary encoding of the simulated machine which is description of (different numbers of '1's means different symbols while '0's means separators)

A Turing Machine is described with a binary string of 0’s and 1’s

Therefore, the set of Turing machines forms a language: each string of this language is the binary encoding of a Turing Machine

Tape 2 contents of Universal Turing Machine: Tapes Contents of

Tape 3 contents of Universal Turing Machine: State of

Countable Sets

There is a one to one correspondence (injection) of elements of the set to Positive integers (1,2,3,…)

e.g. The set of rational numbers is countable

Let be a set of strings (Language)

An enumerator for is a Turing Machine that generates (prints on tape) all the strings of one by one and each string is generated in finite time

Theorem: The set of all Turing Machines is countable

Proof: Any Turing Machine can be encoded with a binary string of 0’s and 1’s.

Find an enumeration procedure for the set of Turing Machine strings

Enumerator: Repeat:

- Generate the next binary string of 0’s and 1’s in proper order

- Check if the string describes a Turing Machine

if YES: print string on output tape

if NO: ignore string

Simpler Proof:

Each Turing machine binary string is mapped to the number representing its value

Uncountable Sets

If is an infinite countable set, then the powerset of is uncountable.

Since Languages accepted by Turing machines is countable but All possible languages is uncountable. There is a language not accepted by any Turing Machine.

LN20 Decidable

Decidable Languages

Turing-Acceptable=Turing-Recognizable=Recursively-enumerable

A language is decidable if there is a Turing machine (decider) which accepts and halts on every input string (Also known as recursive languages).

For any input string

If , halts in an accept state; else halts in a non-accept state.

Note that Every decidable language is Turing-Acceptable

We can convert any Turing machine to have single accept and reject states

Decider: For each input string, the computation halts in the accept or reject state

Turing Machine for : It is possible that for some input string the machine enters an infinite loop

A computational problem is decidable if the corresponding language is decidable. We also say that the problem is solvable.

Problem: Is number prime?

Corresponding language:

Decider for : On input , divide with all possible numbers between and . If any of them divides Then reject else accept.

Problem: Does DFA accpet the empty language ?

Corresponding Language:

Decider for : On input , Determine whether there is a path from the initial state to any accepting state

Problem: Does DFA accpet a finite language?

Corresponding Language:

Decider for : On input , Check if there is a walk with a cycle from the initial state to an accepting state

Check if

which is a solvable problem for DFAs:

Theorem: If a language is decidable, then its complement is decidable too

Undecidable Languages

An undecidable language has no decider: Any Turing machine that accepts does not halt on some input string

If is Turing-acceptable but is not Turing-acceptable, is undecidable such as

LN21 Undecidable

Undecidable Problems

Membership problem

is Turing-acceptable but undecidable

Halting Problem

is Turing-acceptable but undecidable

LN22 Reductions

Reductions

Computable function : There is a deterministic Turing machine which for any input string computes and writes it on the tape

Problem is reduced to problem : If we can solve problem then we can solve problem , which means there is a computable function such that

Theorem 1:

If: Language is reduced to and language is decidable

Then: is decidable